Robots.txt File Explained

June 28th, 2018 / By Andrew Currie

The robots.txt file is a standard, known as the Robots Exclusion Protocol, that lets webmasters tell search engine crawlers (also called spiders or bots) such as Googlebot, Bingbot, MSNBot and Baiduspider whether they may crawl their website, or specific pages or directories of it. Simply put, the robots.txt file lets you decide which parts of your website search engines are allowed to crawl. Note that it controls crawling, not indexing: a page blocked in robots.txt can still appear in search results if other sites link to it.
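To make the crawl-permission idea concrete, here is a minimal Python sketch of the check a well-behaved crawler performs before requesting a page, using the standard library's `urllib.robotparser` module. The rules, the example.com URLs and the helper name `is_allowed` are illustrative assumptions; a real crawler would download the live file (for instance with `RobotFileParser.set_url()` and `read()`) instead of parsing a hard-coded string. Python's parser is only one implementation, and individual search engines may interpret edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules used for illustration only. A real crawler would
# download https://<host>/robots.txt instead, e.g. via set_url() and read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent crawl url."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines and also records the time the
    # rules were loaded, which can_fetch() requires before it will say yes.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://example.com/index.html",
                "https://example.com/admin/login"):
        verdict = "allowed" if is_allowed(ROBOTS_TXT, "Googlebot", url) else "blocked"
        print(f"{url}: {verdict}")
```

Here the page under /admin/ is reported as blocked while every other URL stays crawlable, because the only Disallow rule matches paths that start with /admin/.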

How To Use The Robots.txt File

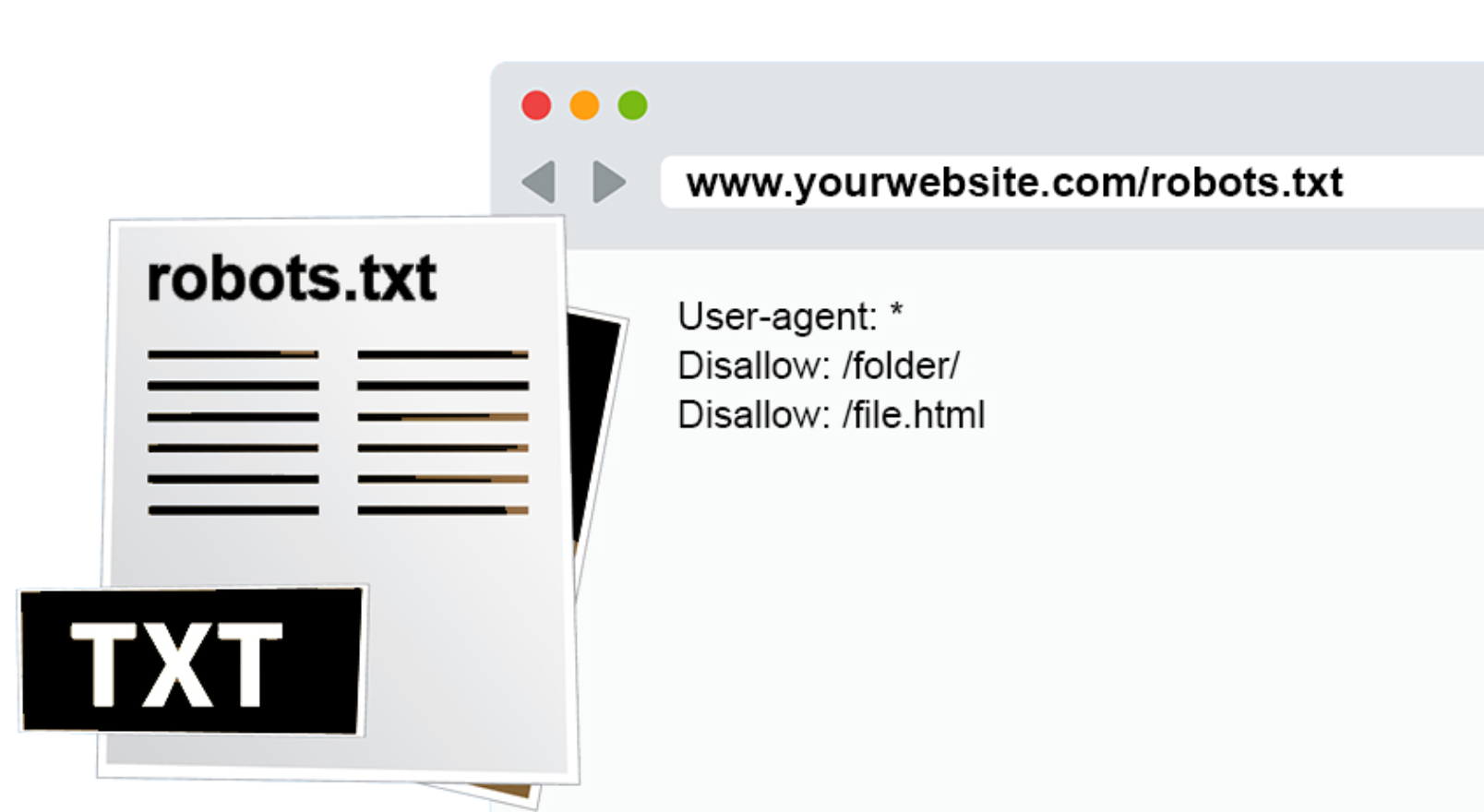

The robots.txt file must be placed in the document root of your website (so that it is reachable at a URL such as https://example.com/robots.txt), and its name should always be written in all lowercase letters. The robots.txt syntax is straightforward and easy to use. Below are some common examples you can follow to configure the robots.txt file for your website(s).
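Because crawlers only look for the file at the root of a host, the robots.txt that applies to any page can be worked out from the page's URL alone. The sketch below uses a hypothetical helper, `robots_txt_url`, built on Python's `urllib.parse`; it assumes, as major search engines document, that each combination of scheme, host name and port is treated as a separate site with its own robots.txt.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the URL of the robots.txt file that governs page_url.

    Crawlers only request the file from the root of the host, using the
    exact lowercase name /robots.txt, so the page's path, query string and
    fragment are discarded. Scheme, host name and port are kept because
    each combination is treated as a separate site.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    # prints https://example.com/robots.txt
    print(robots_txt_url("https://example.com/blog/post.html?page=2#comments"))
    # prints http://shop.example.com:8080/robots.txt
    print(robots_txt_url("http://shop.example.com:8080/cart"))
```

This is also why the lowercase name matters: on a case-sensitive web server, a file saved as Robots.txt lives at a different path from the /robots.txt that crawlers actually request.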

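To allow all robots to crawl your entire website (an empty Disallow value blocks nothing):

User-agent: *
Disallow:

To block all robots from crawling your entire website:

User-agent: *
Disallow: /

To block a single file:

User-agent: *
Disallow: /myfile.html

To block a single folder and everything in it:

User-agent: *
Disallow: /myfolder/

You can add as many ‘Disallow:’ rules as you need; just put each rule on its own line. Below is an example with several pages and folders blocked: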

User-agent: *
Disallow: /api.php
Disallow: /admin.php
Disallow: /admin/
Disallow: /api/

The following variation also blocks the /local/search/ folder:

User-agent: *
Disallow: /api.php
Disallow: /admin.php
Disallow: /api/
Disallow: /admin/
Disallow: /local/search/

The robots.txt file is not the only way you can communicate with search engine bots; you can also use the robots META tag. For example, if you want to keep a single page of your website out of search results, adding the following META tag to the <head> section of that page will do the trick:

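<meta name="robots" content="noindex">

Keep in mind that search engines can only see this tag if they are allowed to crawl the page, so don't also block that page in robots.txt if you are relying on its noindex tag.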
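To show how a search engine might act on this tag, here is a small Python sketch that uses the standard library's `html.parser` to look for a robots META tag containing `noindex`. The class and function names (`RobotsMetaParser`, `is_noindex`) are hypothetical, and real search engines also honor other signals, such as crawler-specific META names, that this sketch ignores.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names, and also
        # calls this method for self-closing tags such as <meta ... />.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        # content may hold several comma-separated directives,
        # e.g. "noindex, nofollow".
        for directive in attributes.get("content", "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def is_noindex(html: str) -> bool:
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex"></head></html>'
    print(is_noindex(page))
```

The attribute values are compared case-insensitively, so variants such as `NAME="Robots" CONTENT="NOINDEX"` are treated the same way as the lowercase tag shown above.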